by artanim | Oct 29, 2025

Project Info

Start date:

January 2025

End date:

December 2026

Funding:

–

Coordinator:

Artanim

Summary

Imagine yourself inside a museum environment, whether real or entirely virtual. The elegant space is filled with fascinating works of art, some of which you immediately recognize, but who was the artist again? Others grab your attention but are entirely unfamiliar. If only someone were around to tell you about the artists behind the works or provide you with details on the works themselves. Thankfully, a virtual museum guide is in the room with you.

One of the drawbacks of more traditional approaches to such a museum guide scenario is the need to define all possible questions and responses up front. This quickly leads to intractable production constraints, and to an end result that, while informative, may feel unnatural or robotic because it is entirely predetermined. The goal of our CurateXR project is to study how a modern generative AI chatbot backed by a Large Language Model (LLM) can provide a real-time and entirely natural means of interacting with a virtual agent in virtual reality (VR) or augmented reality (AR) scenarios. Powered by an animation system that uses Motion Matching and pathfinding to navigate the space naturally, the guide will happily stroll along with you.
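In its common formulation, Motion Matching periodically searches an animation database for the frame whose features (for instance the character's upcoming trajectory, here assumed to be sampled from the path produced by pathfinding) best match the desired motion, and continues playback from there. The following is a minimal brute-force sketch of that search, not the CurateXR animation system: the clip names and the 4-D feature vector (two future (x, z) positions) are hypothetical, and production systems typically normalize features and use acceleration structures such as KD-trees.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    clip: str        # hypothetical animation clip name
    index: int       # frame index within that clip
    features: list   # hypothetical feature vector: two future (x, z) positions


def weighted_sq_distance(a, b, weights):
    # Squared Euclidean distance with a per-dimension weight
    return sum(w * (x - y) ** 2 for x, y, w in zip(a, b, weights))


def motion_match(database, query, weights):
    """Brute-force search for the frame whose features best match the query."""
    return min(database, key=lambda f: weighted_sq_distance(f.features, query, weights))


# Toy database of candidate frames (all values made up for illustration)
database = [
    Frame("idle", 0, [0.0, 0.0, 0.0, 0.0]),
    Frame("walk", 12, [0.5, 0.0, 1.0, 0.0]),
    Frame("turn_left", 5, [0.3, 0.3, 0.5, 0.8]),
]
# Desired future positions, e.g. sampled from the path returned by pathfinding
query = [0.5, 0.05, 0.9, 0.1]
best = motion_match(database, query, weights=[1.0, 1.0, 1.0, 1.0])
print(best.clip, best.index)
```

The weights let a designer trade trajectory accuracy against other features (such as foot positions) without changing the search itself.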

All interactions happen through natural speech. Just ask any question you may have, and the guide will reply without delay, taking into account both the context of the space it was given up front and real-time contextual cues such as your location and the artwork you’re looking at. Not only will the guide engage in a natural conversation with you, but if you feel more comfortable speaking another language, just ask him to switch. Using a generative AI chatbot lowers many of the barriers to entry users may feel when interacting with extended reality applications for the first time, and it provides a level of accessibility that is hard to replicate otherwise.
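One way to combine static scene context with live cues is to rebuild the chat request on every question: a fixed description of the space, a short message describing where the visitor is and what they are looking at, the conversation so far, then the transcribed question. The sketch below assumes the role/content message convention used by many chat-completion APIs; the function name, room and artwork names are hypothetical, and speech recognition, the actual LLM call and speech synthesis are left out.

```python
from typing import Optional


def build_messages(space_brief: str, history: list, user_text: str,
                   room: str, looking_at: Optional[str] = None) -> list:
    """Assemble one chat request: fixed scene context, live cues, past turns, new question."""
    live_cues = f"The visitor is currently in {room}."
    if looking_at:
        live_cues += f" They are looking at {looking_at}."
    return (
        [{"role": "system", "content": space_brief},
         {"role": "system", "content": live_cues}]
        + history  # earlier user/assistant turns, oldest first
        + [{"role": "user", "content": user_text}]
    )


brief = "You are a friendly museum guide. Answer in the visitor's language."
messages = build_messages(brief, [], "Who made this piece?", "Room 2", "Artwork A")
for m in messages:
    print(m["role"], "|", m["content"])
```

Regenerating the live-cue message per request keeps it current as the visitor moves, while the history preserves the thread of the conversation.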

Generative AI chatbots aren’t limited to conversation. They can also turn your words into concrete actions. To demonstrate this, at one end of the museum space you will find a virtual sculpture experience controlled by another agent. Through natural speech you can not only summon three-dimensional shapes in your desired color, but also make them move as you wish. And if you’re not satisfied with the result, there is no need to start from scratch. Just tell your virtual helper what changes you would like to make, and you’ll see the updates happen before your eyes.
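A common way to let an LLM drive a scene is to have it emit structured commands (for example JSON, sometimes through a provider's tool- or function-calling feature) that the application validates and applies. The sketch below is not the CurateXR implementation: it assumes a hypothetical command schema (`spawn`, `move`, `recolor`, `delete`) and a plain dictionary as the scene state. Because commands address existing objects by `id`, a correction such as "make it green" becomes one `recolor` command rather than a rebuild.

```python
import json


def apply_command(scene: dict, raw: str) -> dict:
    """Apply one JSON command (assumed to come from the LLM) to the scene state."""
    cmd = json.loads(raw)
    action = cmd.get("action")
    if action == "spawn":
        scene[cmd["id"]] = {"shape": cmd["shape"], "color": cmd["color"],
                            "position": [0.0, 0.0, 0.0]}
    elif action == "move":
        scene[cmd["id"]]["position"] = [float(v) for v in cmd["to"]]
    elif action == "recolor":
        scene[cmd["id"]]["color"] = cmd["color"]
    elif action == "delete":
        scene.pop(cmd["id"], None)
    else:
        # Reject anything outside the schema instead of guessing what the model meant
        raise ValueError(f"unknown action: {action!r}")
    return scene


scene = {}
apply_command(scene, '{"action": "spawn", "id": "s1", "shape": "sphere", "color": "blue"}')
apply_command(scene, '{"action": "move", "id": "s1", "to": [1, 2, 0]}')
# A later correction edits the existing object instead of starting over
apply_command(scene, '{"action": "recolor", "id": "s1", "color": "green"}')
print(scene)
```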

Integrating a generative AI chatbot into your VR scenarios can level up your experience by providing an entirely natural means of engagement. Just put your headset on and go. And while the museum scenario was chosen as the use-case for this project, the potential range of scenarios that can be supported – from training and education to rehabilitation, entertainment and many more – is virtually endless.

by artanim | Jul 16, 2025

Project Info

Producer:

Dreamscape Immersive / Artanim

Year:

2025

Summary

Your Dreamscape experience is riddled with bugs! These dreadful digital pests might very well eat through everything they see, and you’re the only one standing in their way. Hang on to your speedster as you launch through the rifts at full speed, and explore many corrupted worlds! Set a course for the asteroid field, join forces in a post-apocalyptic desert… and above all, stay alert: a well-deserved break could be the perfect grounds for an ambush. Keep your lasers hot, aim for the highest score, and DEBUG. THIS. MESS!

In this new opus, Artanim collaborated with the Dreamscape Immersive team to create a new free-flying immersive VR experience that combines storytelling and gaming in a subtly balanced mix, with a splash of humor.

Permanently exhibited at the Dreamscape center in Geneva, on level-1 of the Confederation Center. Check https://dreamscapegeneva.com/ for more information and to get tickets.

More information on the VR technology here.

Credits

Production, project direction, scenario, 3D content creation, gameplay and VR platform

Dreamscape Immersive / Artanim

Music

Alain Renaud

Voice actor

Adrien Buensod

by Marlène Arévalo | Jan 22, 2025

Project Info

Client:

Alan Bogona

Year:

2024

Summary

The experimental video project Being Laser – Restless Limbs explores the interactions between light, the human body, and technology. Synthesizing speculative research that combines contemporary utopias and ancient archetypes related to artificial lighting, it highlights the cross-influences between Western and East Asian cultures. The project combines computer-generated (CGI) animated sequences with real footage shot in China, Switzerland, and Italy. The animated sequences feature ethereal, luminous, and ever-changing avatars, brought to life using motion capture techniques.

Credits

Indoor skydiving performers

Benjamin Guex, Olivier Longchamp (RealFly Sion)

Butoh dancer

Flavia Ghisalberti

Motion capture

Artanim

Support by

Pro Helvetia

by artanim | Jul 23, 2024

Project Info

Start date:

September 2024

End date:

August 2028

Funding:

Swiss National Science Foundation (SNSF)

Coordinator:

HUG – Division of Radiology

Summary

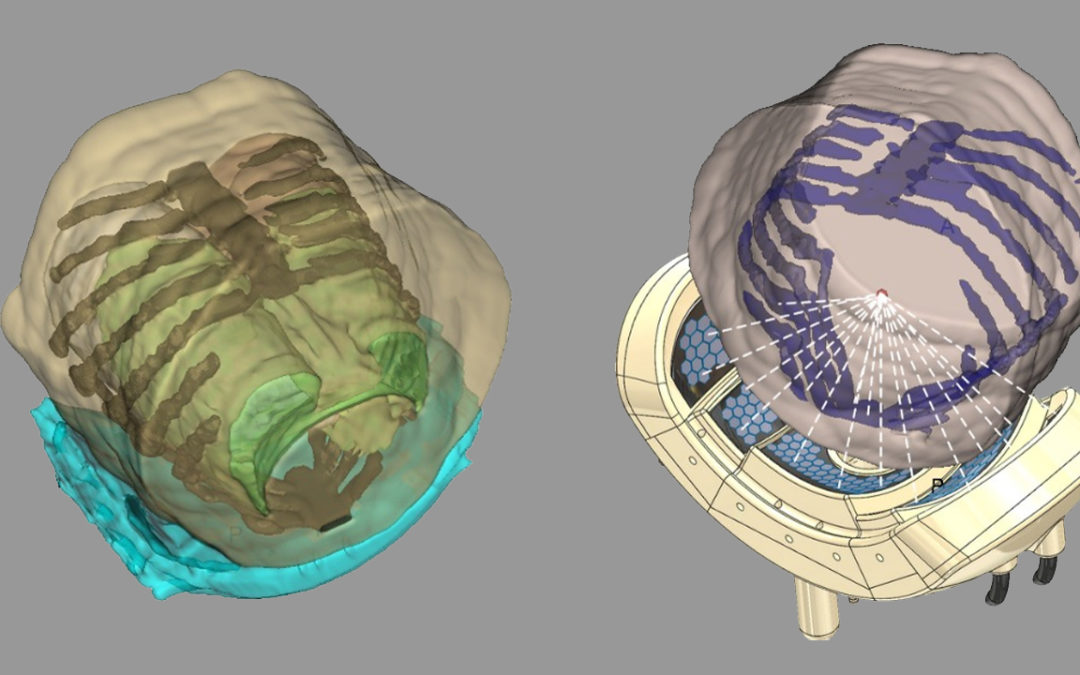

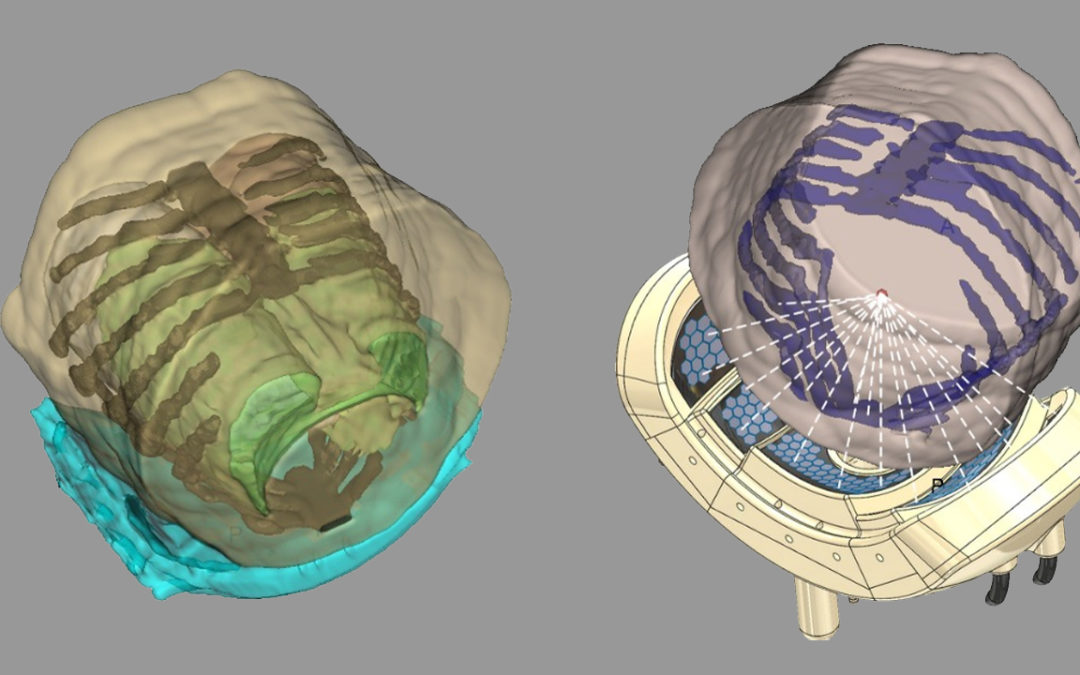

High intensity focused ultrasound (HIFU) is a precise method to thermally ablate deep-seated tumors non-invasively. A prerequisite for safe and effective HIFU is image guidance to plan and control the ablation process. The most suitable imaging modality is MRI, owing to its high soft-tissue contrast and its ability to monitor tissue temperature changes (MR-guided HIFU, MRgHIFU). Therapy of abdominal organs such as the liver still poses several problems: the target moves with breathing, this motion causes MR-thermometry artefacts, and near-field obstacles such as the thoracic cage or bowel obstruct access.

The primary objective of this project is to perform clinical trials of MRgHIFU on liver neoplastic nodules. The project relies on a new MRgHIFU concept developed in a previous SNF project, which will be tested on patients for the first time. The clinical studies will address incrementally demanding requirements in the liver, from basic targeting to complete tumour ablation.

In this project, Artanim provides the computer science expertise, covering the design, implementation, and quality assurance of real-time software that integrates “self-scanning” sonication with closed-loop temperature feedback control.
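To illustrate what closed-loop temperature feedback means in general terms, the sketch below uses a textbook proportional-integral (PI) controller that adjusts sonication power after each temperature reading (as MR thermometry would provide) so the focal temperature tracks a target. It is not the project's controller: the first-order heating model, its coefficients `a` and `b`, the gains and the target temperature are all hypothetical, and clinical systems add safety limits, thermal-dose monitoring and spatially resolved control.

```python
def pi_step(target, measured, integral, dt, kp, ki, p_max):
    """One PI update: returns (acoustic power in W, updated error integral)."""
    error = target - measured
    integral += error * dt
    power = kp * error + ki * integral
    # Power can only be added, never removed, and the transducer output is capped
    return min(max(power, 0.0), p_max), integral


def simulate(target=57.0, t_body=37.0, dt=0.5, steps=600,
             kp=2.0, ki=0.2, p_max=100.0, a=0.05, b=0.1):
    """Toy plant dT/dt = a*P - b*(T - t_body), integrated with explicit Euler.

    a: heating per watt (degC per W*s), b: heat loss rate (1/s); both hypothetical.
    """
    temp, integral, history = t_body, 0.0, []
    for _ in range(steps):
        # In a real system, `temp` would be the latest MR-thermometry measurement
        power, integral = pi_step(target, temp, integral, dt, kp, ki, p_max)
        temp += dt * (a * power - b * (temp - t_body))
        history.append((temp, power))
    return history


history = simulate()
final_temp, final_power = history[-1]
print(f"final temperature {final_temp:.2f} degC, power {final_power:.1f} W")
```

The integral term removes the steady-state error that a purely proportional controller would leave against the constant heat loss; at equilibrium the power settles where heating balances that loss.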

Partners

University Hospitals of Geneva – Division of Radiology

Imaging, data analysis, transducer development, clinical tests and project coordination

University Hospitals of Geneva – Division of Oncology

Clinical trials

University Hospitals of Geneva – Department of Surgery

Liver surgery

Artanim

Design, implementation and quality assurance of real-time software for integration of “self-scanning” sonication