by artanim | Jul 6, 2019 | 3D scanner, Real time interaction, Virtual reality

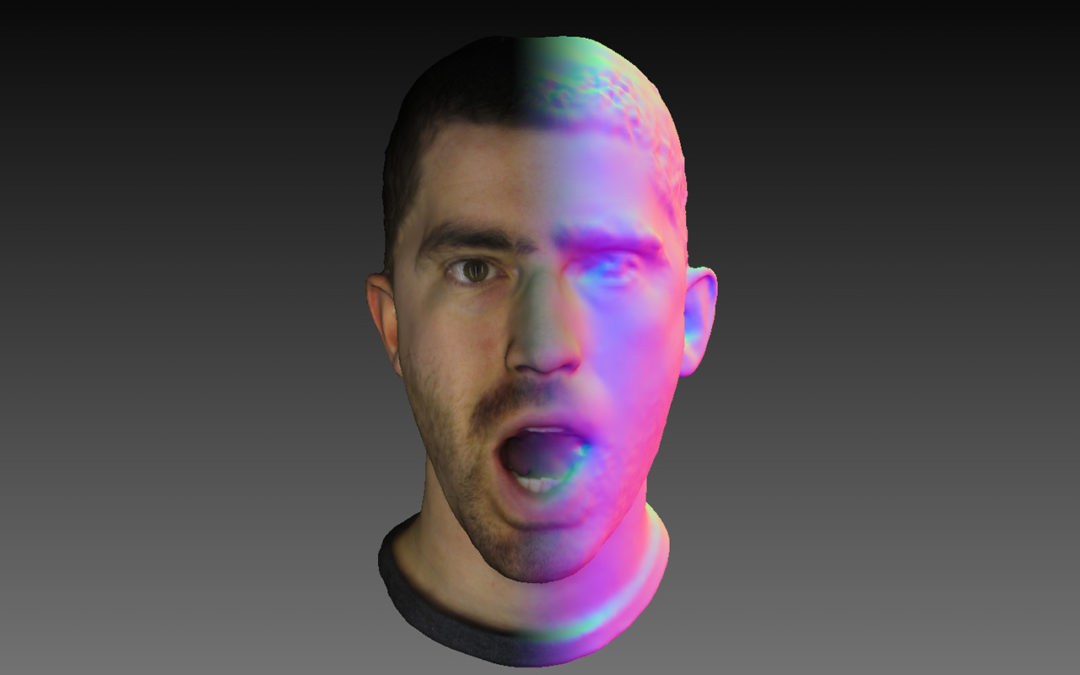

At the heart of the VR-Together project lies the objective of enabling social VR experiences with strong feelings of immersion as well as co-presence. To achieve this strong sense of sharing a space together, the project uses photorealistic real-time representations of users rather than the abstract avatars found in offerings such as Facebook Spaces or AltspaceVR. Using state-of-the-art technologies developed by consortium partners and off-the-shelf hardware such as Microsoft Kinect or Intel RealSense sensors, users are scanned in real time, and the captured representations are processed and streamed as point clouds or time-varying meshes (TVM). These approaches to user representation, combined with HMD removal technology, allow users who share the virtual space from geographically separate locations to see each other in all three dimensions.
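To make "streamed as point clouds" a little more concrete, here is a minimal sketch of packing one captured frame into bytes for transmission. The wire layout (a frame header, then per-point positions quantized to millimetres as 16-bit integers plus 8-bit RGB colour) and all names are hypothetical illustrations, not the format or compression used by the VR-Together pipeline.

```python
import struct
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical wire layout, for illustration only:
#   header:    uint32 frame_id, uint32 point_count (little-endian)
#   per point: 3 x int16 position in millimetres, 3 x uint8 colour
# int16 millimetres limit positions to roughly +/-32.7 m, plenty for a room.
HEADER = struct.Struct("<II")
POINT = struct.Struct("<hhhBBB")  # 9 bytes per point, no padding with "<"


@dataclass
class Point:
    xyz: Tuple[float, float, float]  # position in metres
    rgb: Tuple[int, int, int]        # colour, 0-255 per channel


def pack_frame(frame_id: int, points: List[Point]) -> bytes:
    """Quantize positions to millimetres and pack one frame into bytes."""
    out = bytearray(HEADER.pack(frame_id, len(points)))
    for p in points:
        mm = [int(round(c * 1000)) for c in p.xyz]
        out += POINT.pack(*mm, *p.rgb)
    return bytes(out)


def unpack_frame(data: bytes) -> Tuple[int, List[Point]]:
    """Inverse of pack_frame: recover the frame id and the (quantized) points."""
    frame_id, count = HEADER.unpack_from(data, 0)
    points = []
    offset = HEADER.size
    for _ in range(count):
        x, y, z, r, g, b = POINT.unpack_from(data, offset)
        offset += POINT.size
        points.append(Point((x / 1000, y / 1000, z / 1000), (r, g, b)))
    return frame_id, points
```

At 9 bytes per point, a frame of 100,000 points is about 0.9 MB before any compression, which illustrates why real systems compress point clouds or switch to meshes for streaming.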

Early feedback from users of the Pilot 1 demonstrations regarding the ability to see themselves and others has been positive. The question remains, however, whether accurate self-representation has a significant positive impact on your sense of immersion, co-presence and overall quality of experience, both when seeing yourself and when interacting with others who share the same virtual environment.

To answer this question, VR-Together consortium partner Artanim will run an experiment this summer in which users are placed in a virtual environment and represented by a version of themselves at varying levels of realism and likeness.

User representations will be created at 3 different levels of accuracy:

- An abstract avatar-like representation which does not match the participant

- A realistic representation of the participant

- An intermediate, more stylized (perhaps cartoon-like) representation of the participant that is still recognizable but steers clear of undesirable effects such as the “Uncanny Valley”.

To evaluate self-representation, single users will be placed in a virtual environment where they can observe themselves in a virtual mirror. The question there is whether increased likeness improves the overall VR experience. To evaluate the importance of avatar likeness in the representation of others, pairs of users who know each other (i.e. friends or family) will share a virtual environment, again represented at varying levels of likeness. The goal there is to understand the effects on aspects such as immersion, togetherness and quality of interaction.
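The post does not say whether each participant experiences all three representation levels or in what order. If the study were run within-subjects, a common way to keep order effects from favouring one level is a Latin square, where each level appears once in every position across the set of orders. The sketch below assumes that design; the condition labels are our own shorthand, not the study's.

```python
from typing import List, Sequence

# Shorthand labels for the three representation levels (our naming, hypothetical).
CONDITIONS = ["abstract", "stylized", "realistic"]


def latin_square_orders(conditions: Sequence[str]) -> List[List[str]]:
    """Cyclic Latin square: row r is the condition list rotated left by r.

    Every condition appears exactly once in each presentation position
    across the n rows.
    """
    n = len(conditions)
    return [[conditions[(r + c) % n] for c in range(n)] for r in range(n)]


def order_for_participant(index: int, conditions: Sequence[str] = CONDITIONS) -> List[str]:
    """Cycle participants (or pairs, for the two-user sessions) through the rows."""
    rows = latin_square_orders(conditions)
    return rows[index % len(rows)]
```

For the paired sessions, the index would count pairs rather than individuals so that both partners see the same sequence. A cyclic square balances position but not which condition directly follows which; a Williams design would be needed for that.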

The proposed experiment will help us better understand what scenarios benefit most from realistic and recognizable user representation in Virtual Reality experiences, and to what extent realism is desirable in social VR.

by artanim | May 15, 2018 | 3D scanner, Vicon, Virtual reality

Artanim collaborated with Entropy Studio on the shooting of the first pilot of the VR-Together project. After a short flight from Madrid to Geneva, the actors were scanned with our 96-camera photogrammetric scanner to obtain the 3D surface of their bodies. Steve Galache (known for his work on The Vampire in the Hole, 2010; El cosmonauta, 2013; and Muertos comunes, 2004), Jonathan David Mellor (known for The Wine of Summer, 2013; Refugiados, 2014; and [Rec]², 2009) and Almudena Calvo were the main characters of this first experience. For the scans they wore the same costumes as in the filmed scenes.

The shooting was split over two days. The first day was dedicated to filming the actors in costume against a full chroma-key background with a stereo camera. This footage will allow the creation of photoreal stereo-billboards that will be integrated into a full CG environment. The second day focused on full performance capture of the actors. Each equipped with 59 retro-reflective markers and a head-mounted iPhone X, the actors successfully performed the investigation plot (an interrogation scene). These data will later be used to animate the 3D models of the actors generated from the 3D scans, and these full-CG models will finally be integrated into the same virtual environment.

This pilot project will thus offer two different rendering modalities for real actors (stereo-billboards and CG characters). The impact of both techniques will be evaluated in user studies focusing on social presence and immersion.

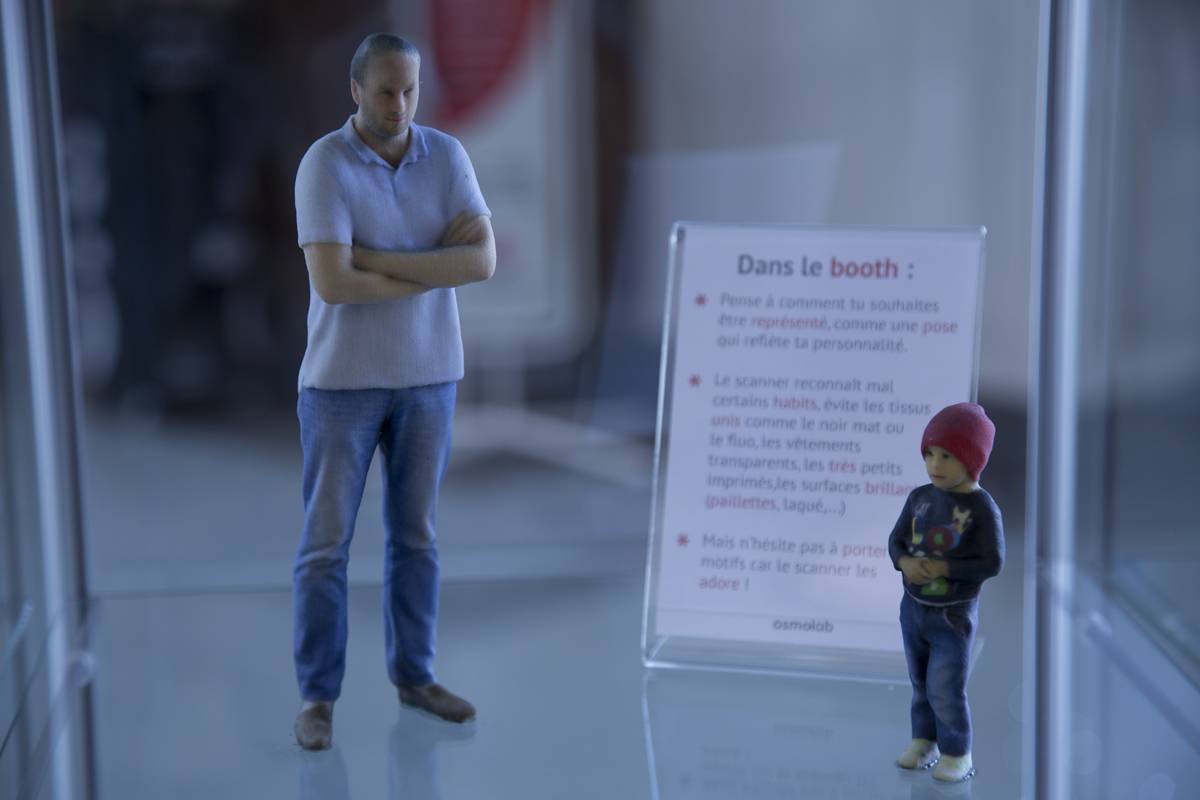

by artanim | Nov 28, 2014 | 3D scanner

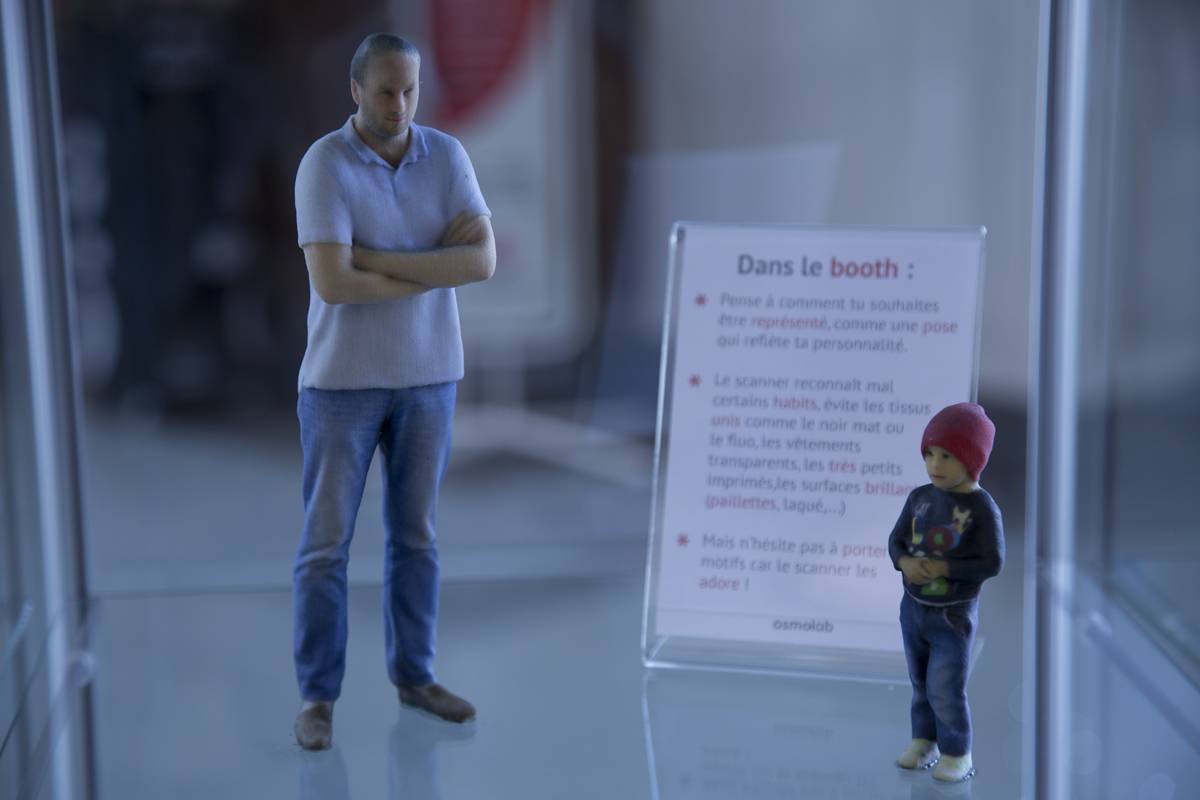

Are you dreaming of cloning yourself in 3D? This is now possible at the Coop Bassin center in Conthey (VS), thanks to the osmo-lab initiative. The photogrammetric scanner developed by Artanim is installed there until December 6 to scan your body and print your 3D figurine. The local press has covered it.

And you, what have you planned as a gift for Christmas?

by artanim | Feb 27, 2014 | 3D scanner

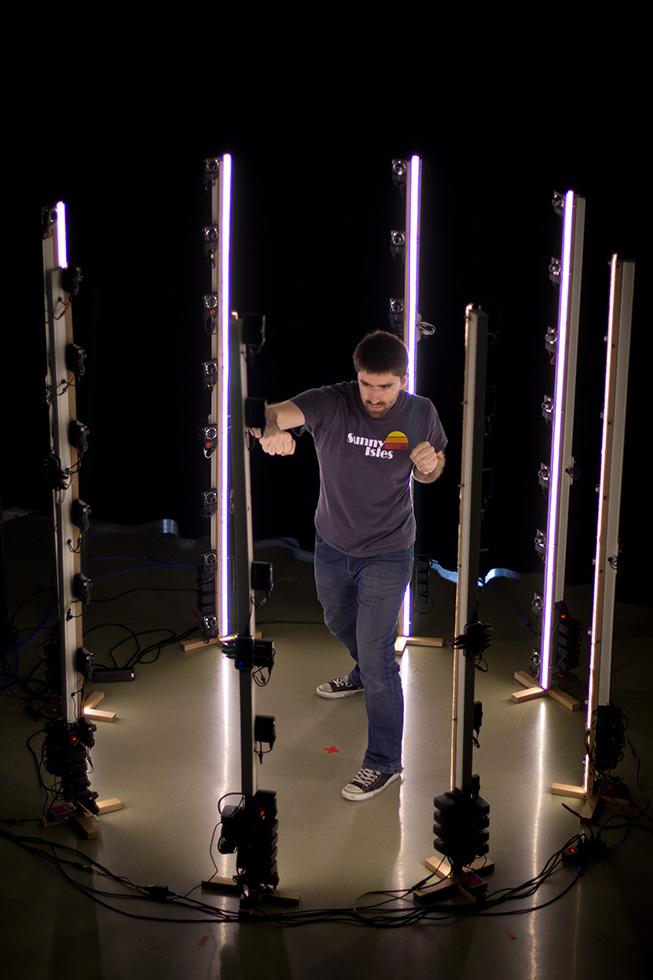

So you have an animation and simulation project, and want to scan people to get their high-resolution 3D meshes. At that point you have a wide variety of options based on different capture technologies: big bulky machines, hand-held devices, and scanners that can take quite a while to obtain a scan. So what do you do when you don’t have a lot of permanent space, when you ideally want to obtain a scan in a single shot, and you want to do all that on a budget? Well, you build your own solution of course.


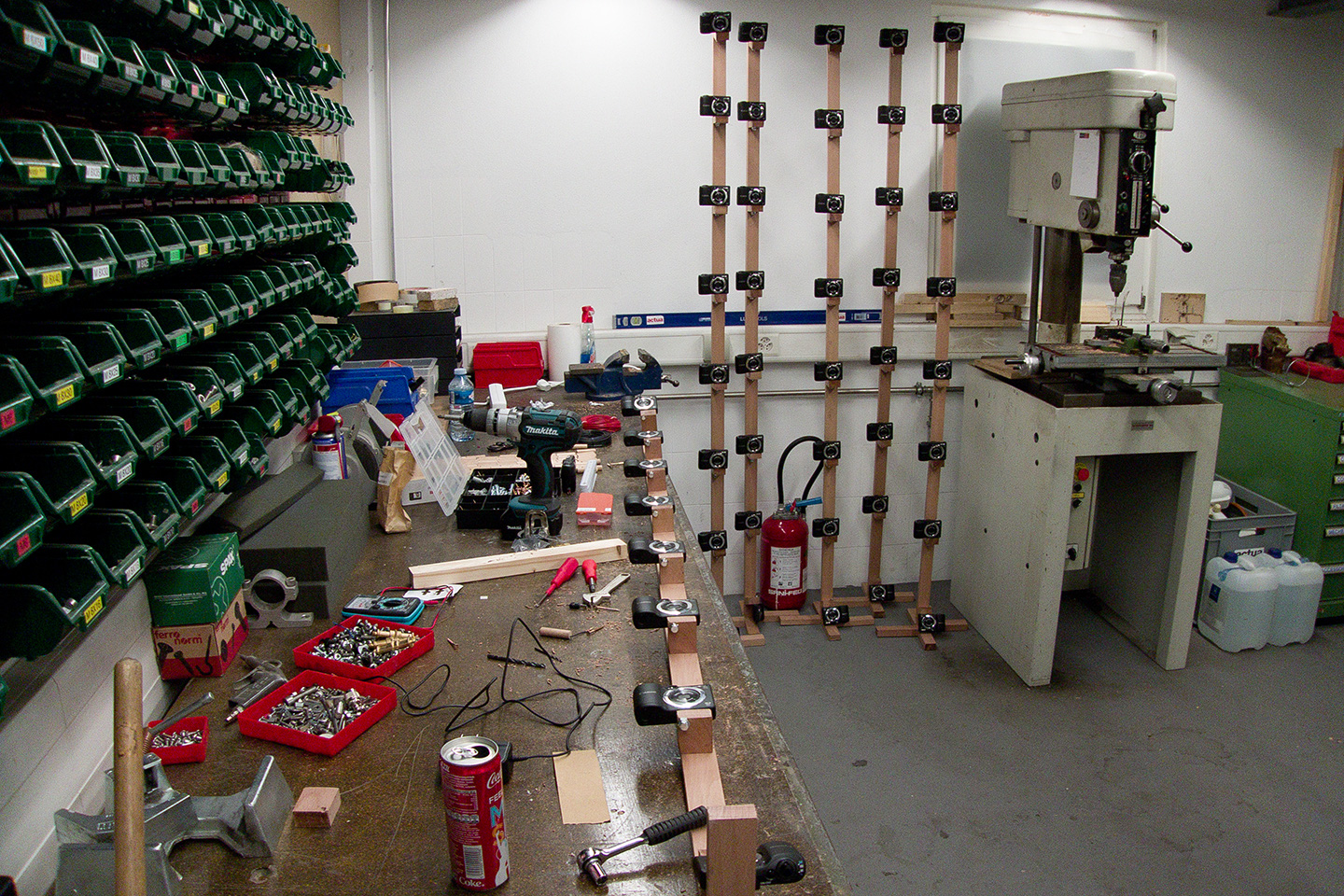

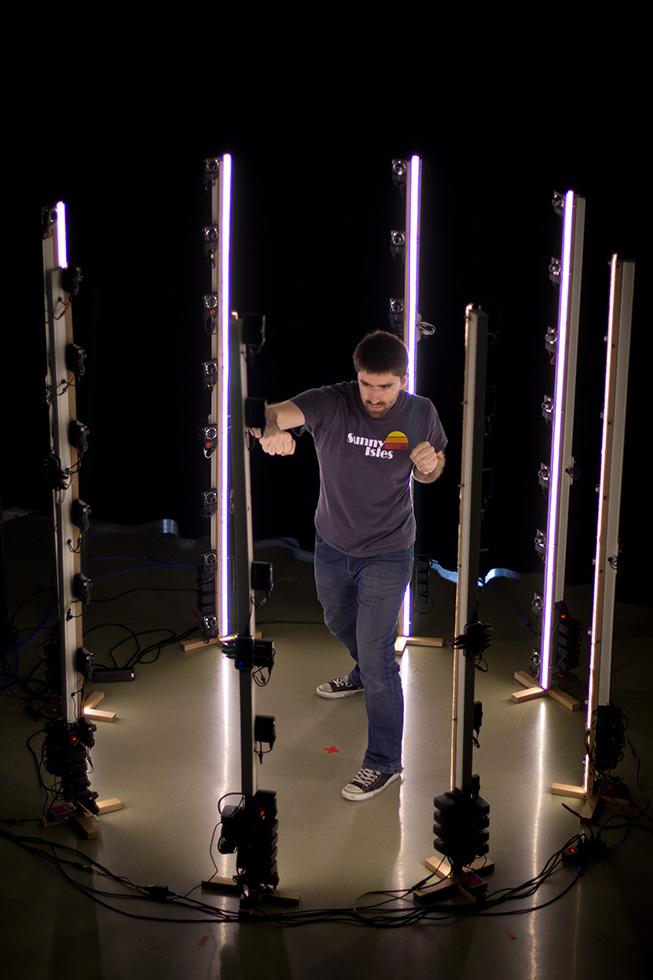

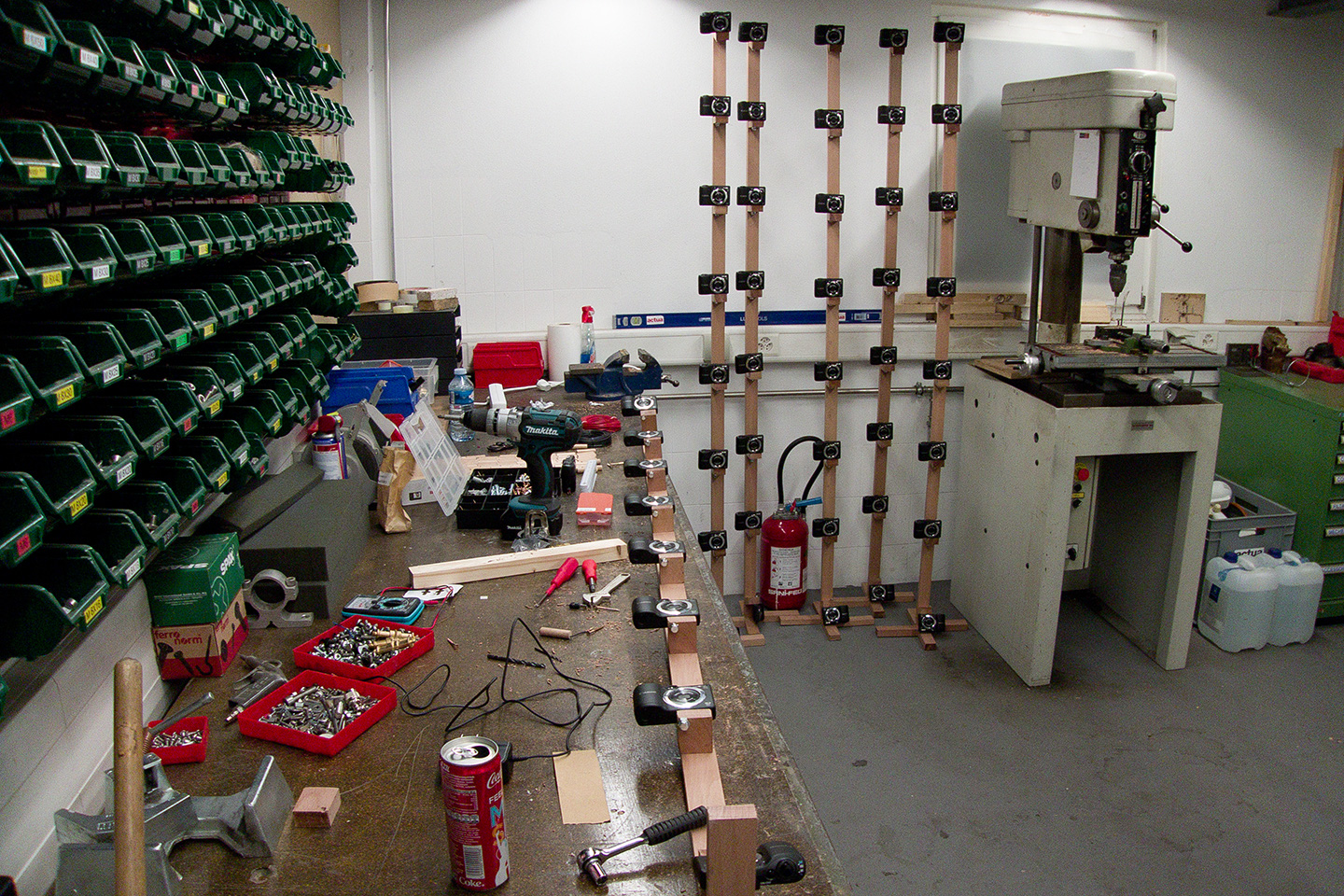

You visit your local camera vendor and empty their shelves of 64 Canon PowerShot A1400 cameras, which should suffice to build 8 portable 3D scanning poles. Throw in a large number of USB cables, add some USB hubs, grab some wood and hardware from your local DIY store, add some bright LED strips for lighting, and you have all the ingredients necessary to build your own scanner.
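Splitting 64 cameras over 8 poles gives 8 cameras per pole. When scripting the rig it helps to have a fixed mapping from a global camera number to a physical position, and a quick count of hubs. The sketch below is one way to do that; the bottom-to-top slot numbering and the "one downstream port per camera" hub model are assumptions, not details given in the post.

```python
from typing import Tuple

NUM_CAMERAS = 64
NUM_POLES = 8
CAMERAS_PER_POLE = NUM_CAMERAS // NUM_POLES  # 64 / 8 = 8


def camera_position(index: int) -> Tuple[int, int]:
    """Map a global camera index (0-63) to (pole, slot).

    Assumed convention: cameras are numbered pole by pole, and slot 0 is
    the bottom camera on its pole.
    """
    if not 0 <= index < NUM_CAMERAS:
        raise ValueError(f"camera index out of range: {index}")
    return divmod(index, CAMERAS_PER_POLE)


def hubs_needed(cameras: int, ports_per_hub: int) -> int:
    """Ceiling division: hubs required if each camera uses one downstream port.

    Ignores ports lost to chaining hubs together (a simplifying assumption).
    """
    return -(-cameras // ports_per_hub)
```

For example, with hypothetical 7-port hubs each 8-camera pole would need `hubs_needed(8, 7)`, i.e. 2 hubs.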

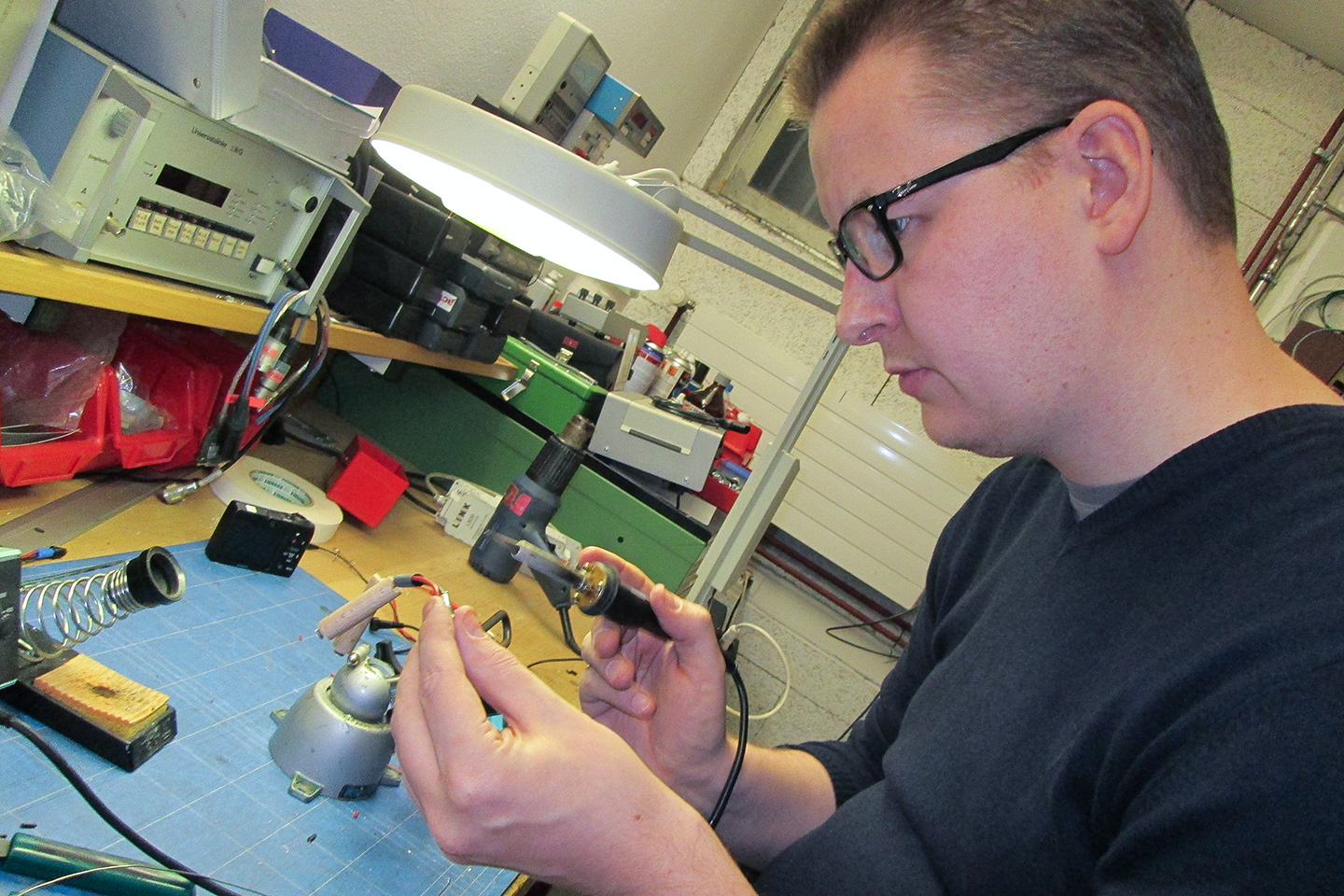

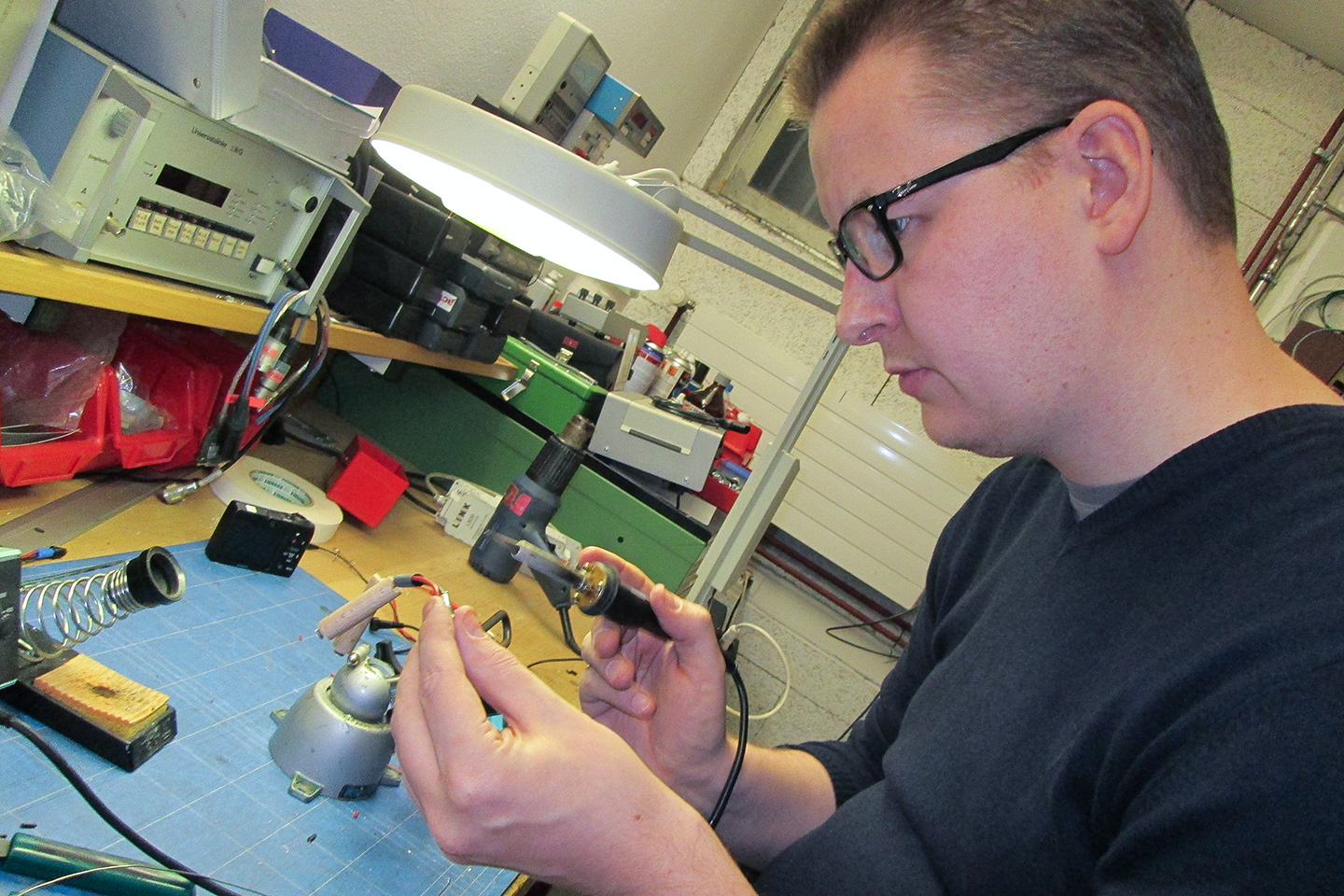

Being the efficient people that we are, we did not like the idea of charging and replacing the batteries of 64 individual cameras at every session. So external power it is. Unfortunately, the official adapter is expensive. And since we are not all that patient when it comes to seeing the results of our labour, an ordering time of several weeks made us wonder: “If we’re building the whole thing ourselves, surely we can make our own external power supplies as well.” As soon as we had that thought, a “battery” assembly line sprang to life, wiring up the wooden batteries and soldering the power-supply connectors.

The construction:

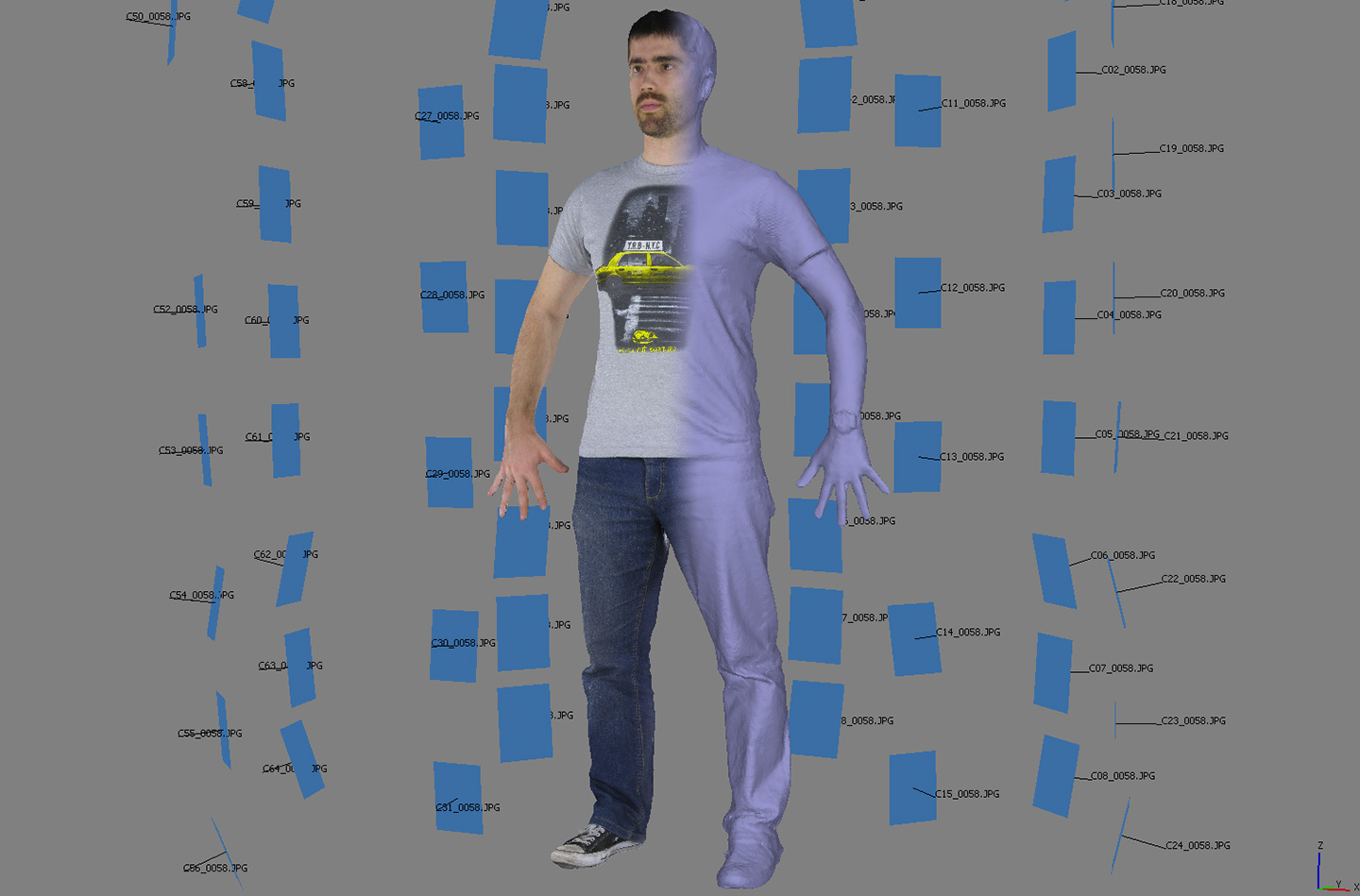

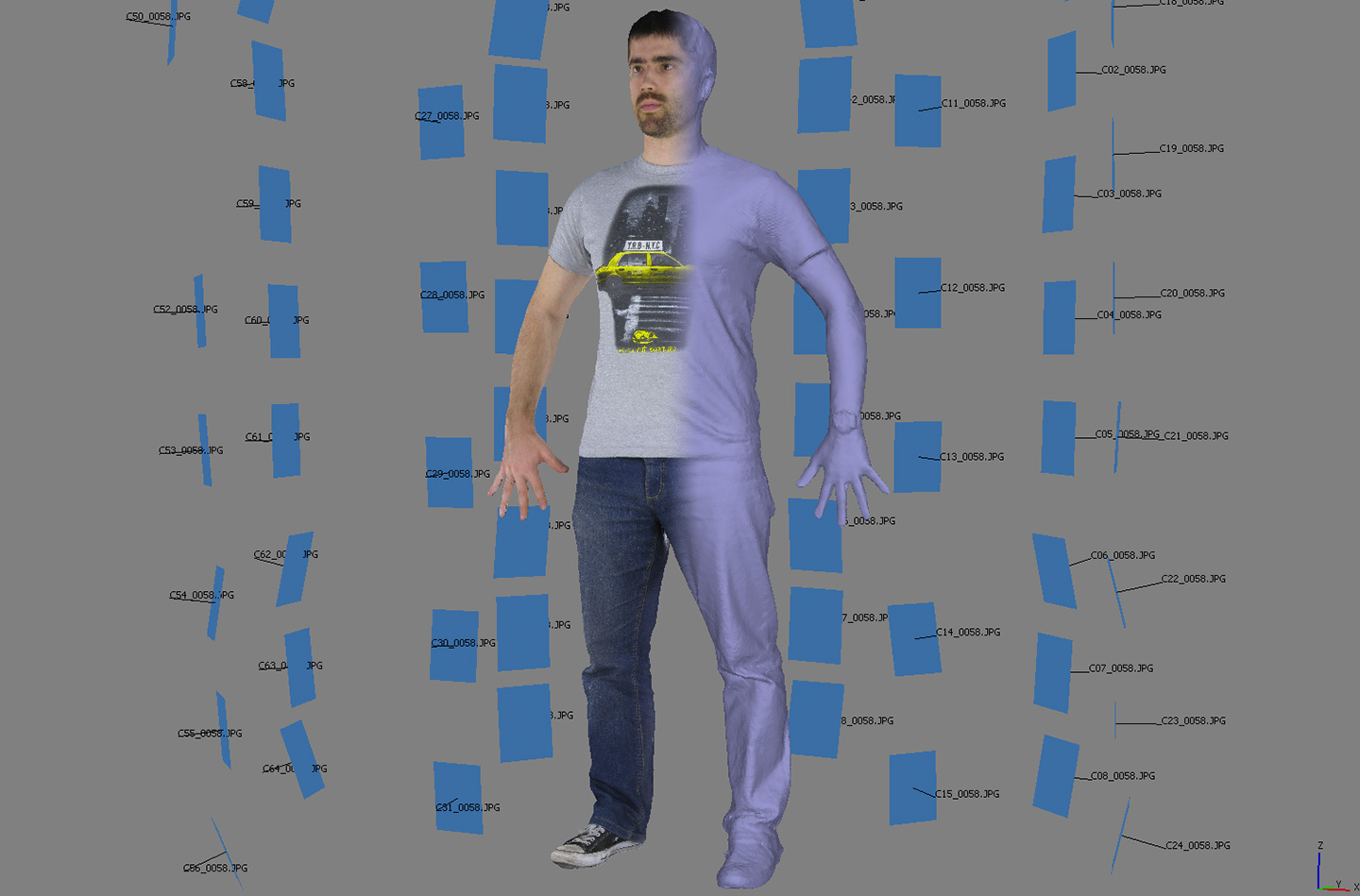

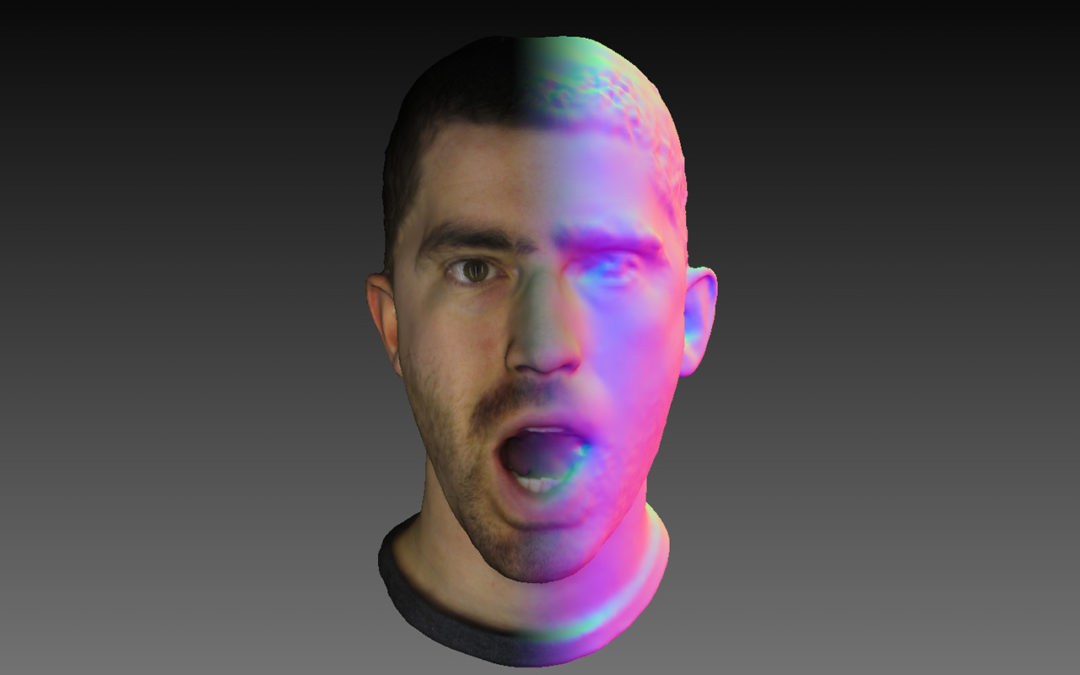

On the software side, the Canon Hack Development Kit (CHDK) allows us to control all 64 cameras using custom scripts. With all cameras closely synchronized, we don’t have to worry much about minor movements of the scanned subject, as long as the shutter time is fairly short. The actual capture is completed in the blink of an eye. The captured images are processed into a 3D mesh with a clean texture using Agisoft PhotoScan, which requires only minimal manual tweaking. For animation, some additional cleanup and remeshing in other software might be necessary, but the raw results are already very detailed and usable.
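Why a short shutter and near-synchronous triggering matter can be shown with simple arithmetic: a subject moving at speed v shifts by v × t during an exposure of length t, and by v × Δt between the first and last camera to fire. A pinhole model then converts that shift into pixels as size × f / (d × pixel pitch). All numeric values below (sway speed, shutter time, trigger spread, distance, focal length, pixel pitch) are illustrative assumptions, not measurements from this rig or specifications of the A1400.

```python
def displacement_mm(speed_mm_per_s: float, duration_s: float) -> float:
    """Distance a subject moving at constant speed covers in duration_s."""
    return speed_mm_per_s * duration_s


def mm_to_pixels(size_mm: float, distance_mm: float, focal_mm: float,
                 pixel_pitch_mm: float) -> float:
    """Pinhole projection: image size = object size * f / d, divided by pixel pitch."""
    return size_mm * focal_mm / (distance_mm * pixel_pitch_mm)


# Illustrative values only; none were measured on the scanner described here.
SPEED = 20.0           # mm/s, slow involuntary sway of a standing person (assumed)
SHUTTER = 1 / 100      # s, exposure time (assumed)
TRIGGER_SPREAD = 0.05  # s between first and last camera firing (assumed)
DISTANCE = 1000.0      # mm, camera-to-subject distance (assumed)
FOCAL = 5.0            # mm, lens focal length (assumed)
PITCH = 0.0015         # mm, sensor pixel pitch (assumed)

# Motion blur within a single image, and offset between images from different cameras.
blur_px = mm_to_pixels(displacement_mm(SPEED, SHUTTER), DISTANCE, FOCAL, PITCH)
skew_px = mm_to_pixels(displacement_mm(SPEED, TRIGGER_SPREAD), DISTANCE, FOCAL, PITCH)
```

With these assumed numbers the blur stays under one pixel, while a 50 ms trigger spread shifts the subject by a few pixels between views. This suggests that, for a standing subject, synchronization matters more than shutter speed.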

And there you have it. Eight very portable and storable scanning poles, allowing us to capture bodies or objects at a sufficiently high resolution and in a short time.

The first results:
