Real Virtuality

Immersive platform

Project Info

Start date: April 2015

End date: –

Funding: –

Coordinator: Artanim

Summary

Artanim invented, and has continuously developed since 2015, the VR technology that powers Dreamscape Immersive. This multi-user immersive platform combines a 3D environment, which users see and hear through a VR headset, with a real-life stage set that incorporates haptic feedback elements.

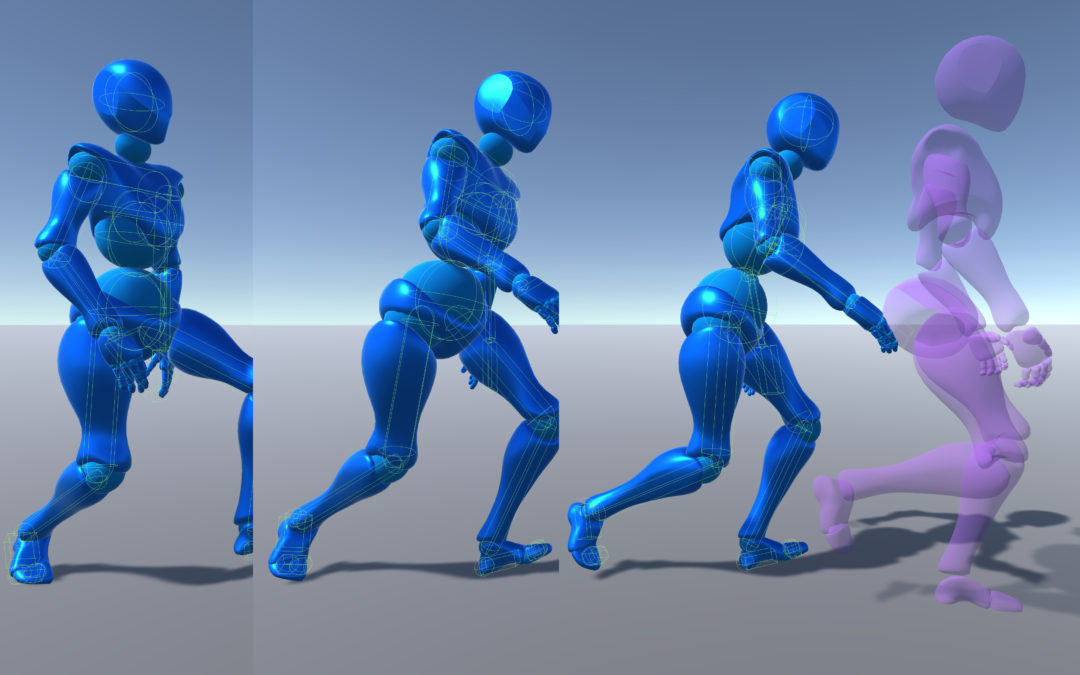

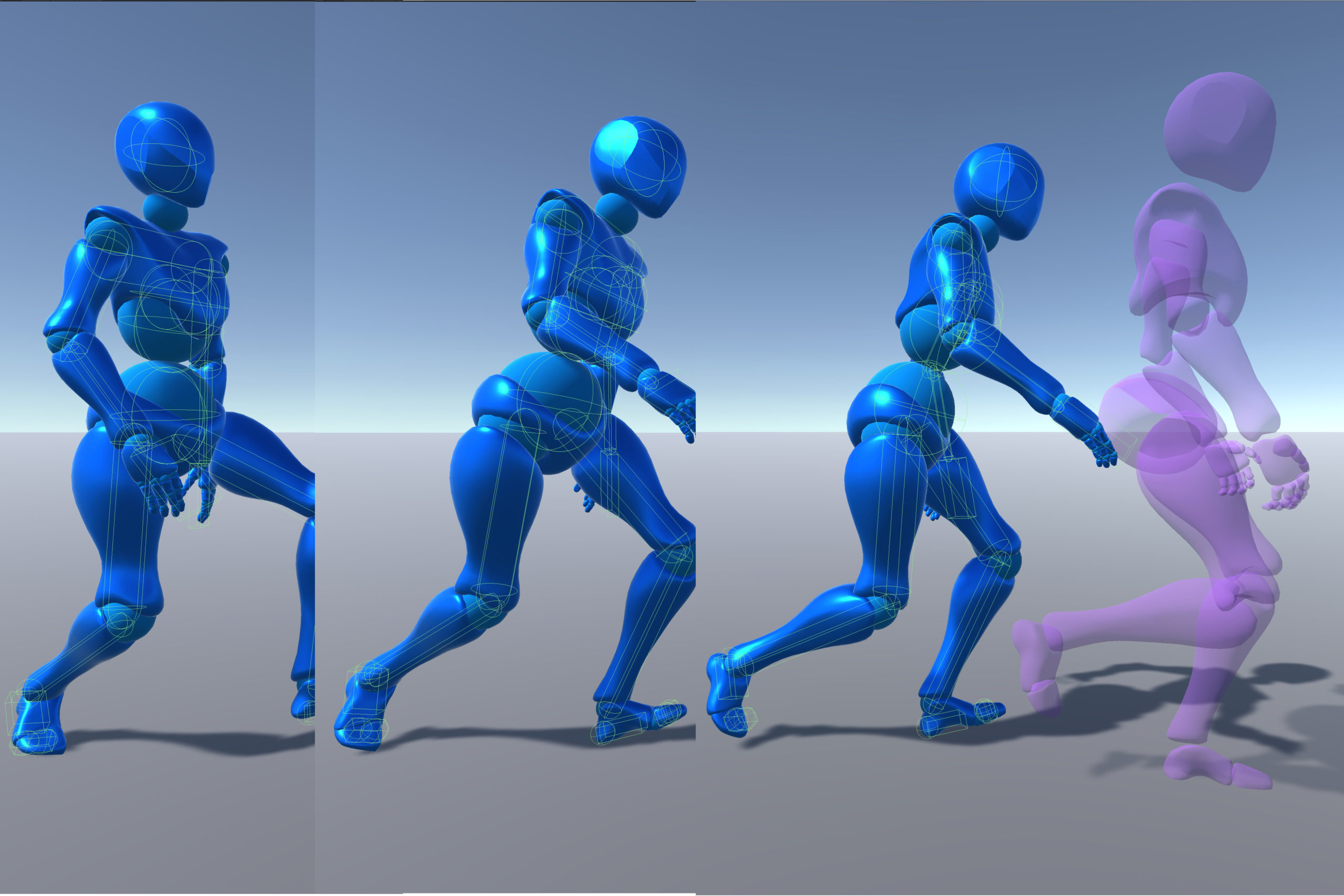

Each user's movements are captured sparsely in real time and translated into full-body animation, based on a deep understanding of body mechanics. Unlike static-position VR systems, the platform allows up to eight users to feel truly immersed in a VR scene. They are rendered as characters (avatars) inside a computer-generated world where they can move physically, interact with objects and other players, and experience worlds previously accessible only in their imagination. The users' bodies thus become the interface between the real and virtual worlds.

The platform combines the following features:

- Wireless: complete freedom of movement across large spaces.

- Social: interactive multi-user experiences within a shared environment or across connected pods.

- Accurate: full-body and physical-object tracking with less than 1 millimeter of deviation.

- Real-time: no discernible lag, which eliminates most motion-sickness concerns.

- Flexible: an SDK that lets content creators easily build experiences for this platform.

Dreamscape Immersive uses this platform in its location-based VR entertainment centers worldwide, as well as in educational and training programs and other enterprise solutions.

The platform was also used to produce VR_I, the first-ever choreographic work in immersive virtual reality, and Geneva 1850, a time-travel experience and historical reconstruction of Geneva as it was in 1850.

Related Publications

Charbonnier C, Trouche V. Real Virtuality: Perspective offered by the Combination of Virtual Reality Headsets and Motion Capture, White Paper, August 2015.


Awards and Recognitions