Char4VR

Interactive characters for VR experiences

Project Info

Start date:

September 2020

End date:

December 2023

Funding:

–

Coordinator:

Artanim

Summary

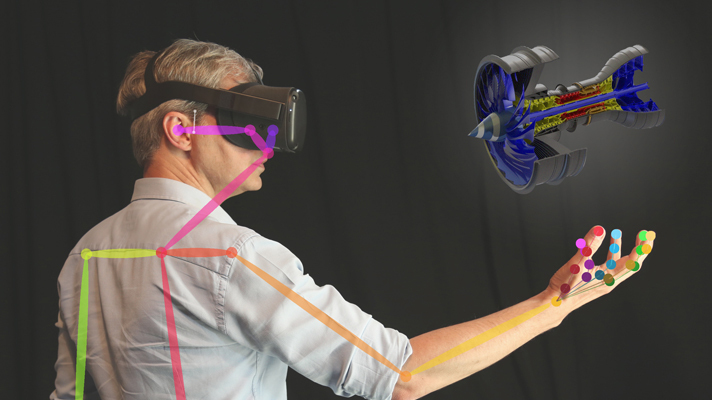

Virtual Reality (VR) makes it possible to develop a new kind of content format that is experienced as a rich narrative, as movies and theater plays are, while also allowing interaction with autonomous virtual characters. The experience would feel like watching several autonomous characters unfold a consistent story plot, as in a movie or a theater play, but within an interactive VR simulation. Players could choose to participate in the events and affect some parts of the story, or simply watch the plot unfold around them.

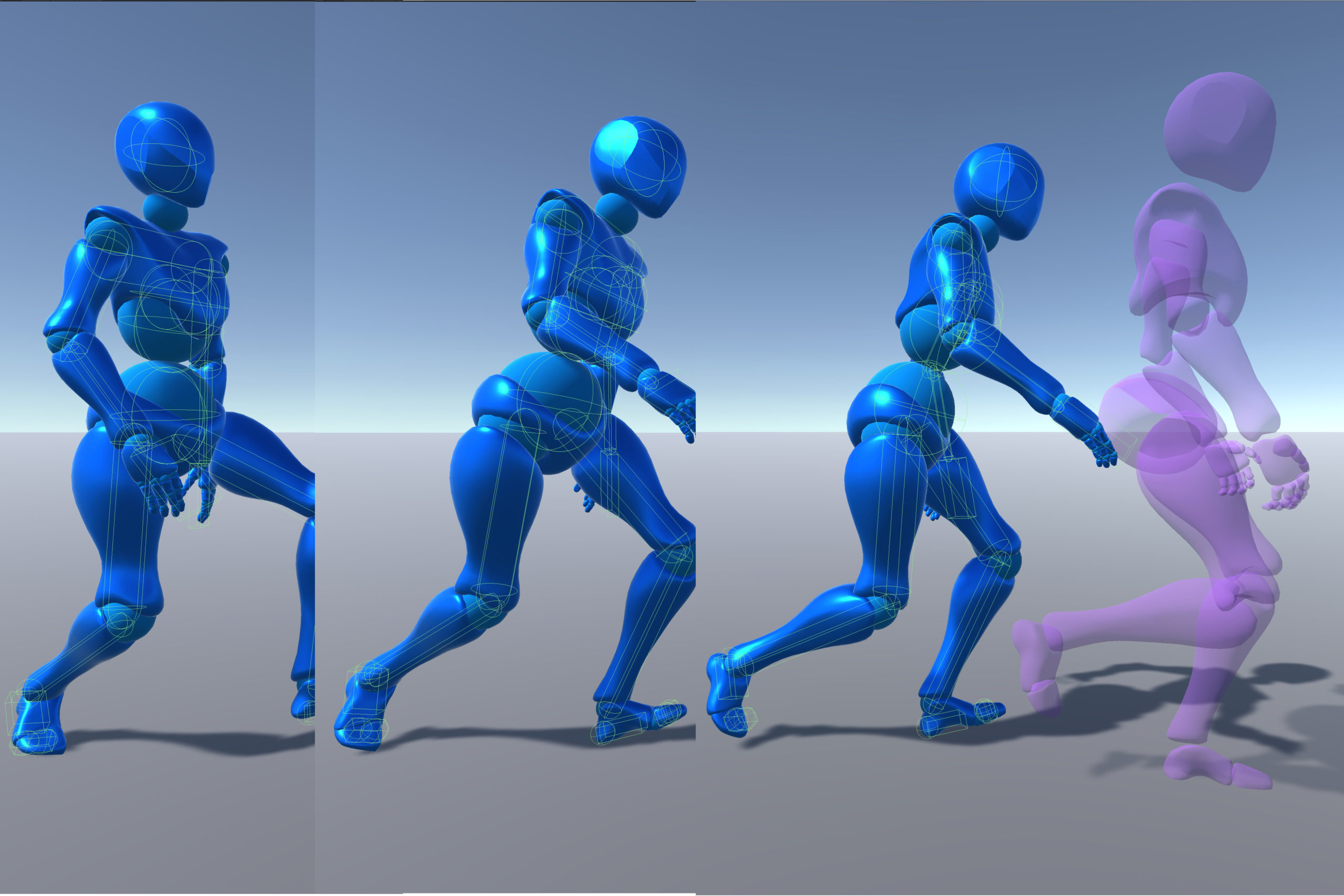

The main challenge in realizing this vision is that current techniques for interactive character animation are designed for video games and do not offer the subtle multi-modal interaction that VR users spontaneously expect. The main goal of this project is to explore techniques for interactive character animation that help create characters able to engage with players in a more compelling way. To achieve this goal, we combine research techniques from computer graphics, machine learning and cognitive psychology.

Related Publications

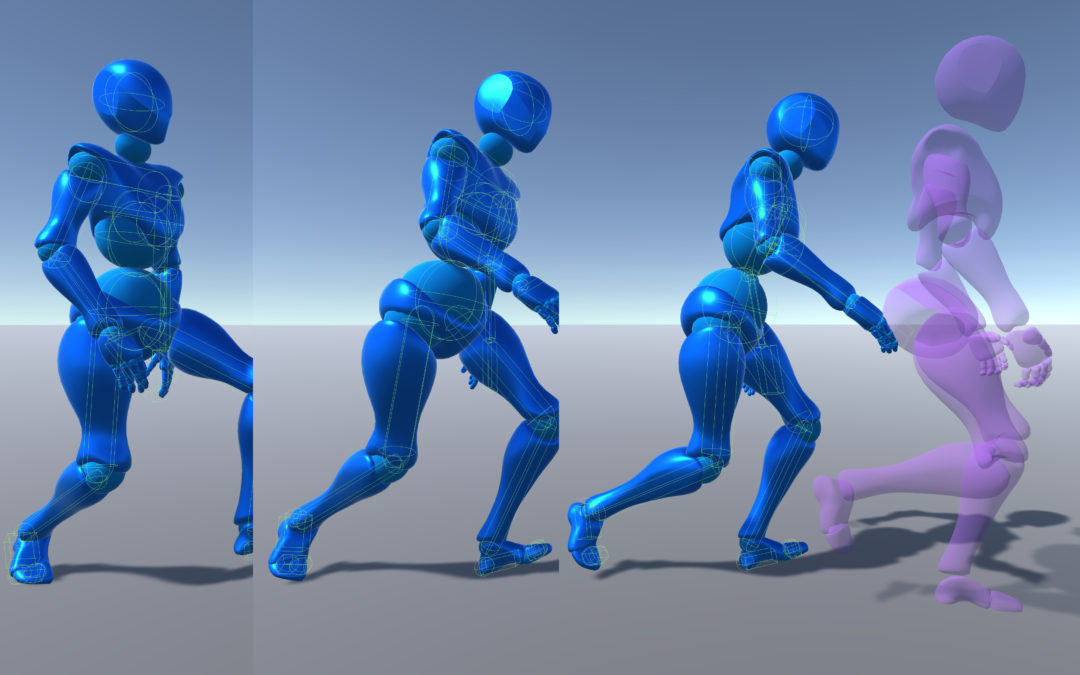

Llobera J, Charbonnier C. Physics-based character animation and human motor control, Phys Life Rev, 46:190-219, 2023.

Llobera J, Jacquat V, Calabrese C, Charbonnier C. Playing the mirror game in virtual reality with an autonomous character, Sci Rep, 12:21329, 2022.

Llobera J, Charbonnier C. Physics-based character animation for Virtual Reality, Open Access Tools and Libraries for Virtual Reality, IEEE VR Workshop, March 2022. 2022 Best Open Source Tool Award.

Llobera J, Booth J, Charbonnier C. Physics-based character animation controllers for videogame and virtual reality production, 14th ACM SIGGRAPH Conference on Motion, Interaction and Games, November 2021.

Llobera J, Booth J, Charbonnier C. New Techniques on Interactive Character Animation, SIGGRAPH ’21: short course, ACM, New York, NY, USA, August 2021.

Llobera J, Charbonnier C. Interactive Characters for Virtual Reality Stories, ACM International Conference on Interactive Media Experiences (IMX ’21), ACM, New York, NY, USA, June 2021.
