ABC-Space

Attention, Behavior and Curiosity in Space

Project Info

Start date:

September 2024

End date:

August 2028

Funding:

Swiss National Science Foundation (SNSF)

Coordinator:

Artanim

Summary

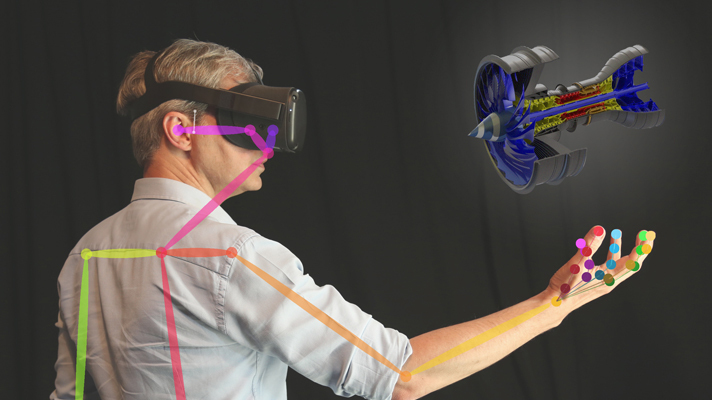

The aim of ABC-Space is to better understand how Attention and Curiosity are deployed in Space, and how they affect educational Virtual Reality (VR) experiences that use embodied social virtual characters. ABC-Space will also bring foundational insights into how social signals from interactive automated characters influence the cognitive and motivational processes that guide attention and memory in VR.

Our results will provide new insights in VR science, educational psychology, and cognitive science. These results will be achieved through a combination of novel experimental paradigms probing attention, motivation, and memory in VR. By the end of the project, ABC-Space will have contributed to the understanding of the relations between attention and curiosity, memory and learning, and 3D space representations, as well as their interplay in fully immersive VR. This knowledge will have important implications both for VR science and for cognitive science and psychology. ABC-Space will thus pave the way for the design of a new kind of educational VR agent, expand current theoretical models of attention and 3D space representation in humans, and demonstrate their educational impact for applied purposes.

In this project, Artanim develops physics-based controllers that use deep reinforcement learning to drive virtual reality characters, produces the VR environments, and contributes to the design of the behavioural experiments that validate the characters' impact on attention and learning.

Partners

Artanim

Physics-based interactive Virtual Reality characters capable of signaling and social cueing

University of Geneva – Laboratory for Behavioral Neurology and Imaging of Cognition (LABNIC)

Cognitive and affective processes governing human attention in space

University of Geneva – Educational Technologies (TECFA)

Models of space representation used to form cognitive maps, and their impact in educational VR experiences